CEINT NanoInformatics Knowledge Commons (NIKC)

What is the CEINT NanoInformatics Knowledge Commons?

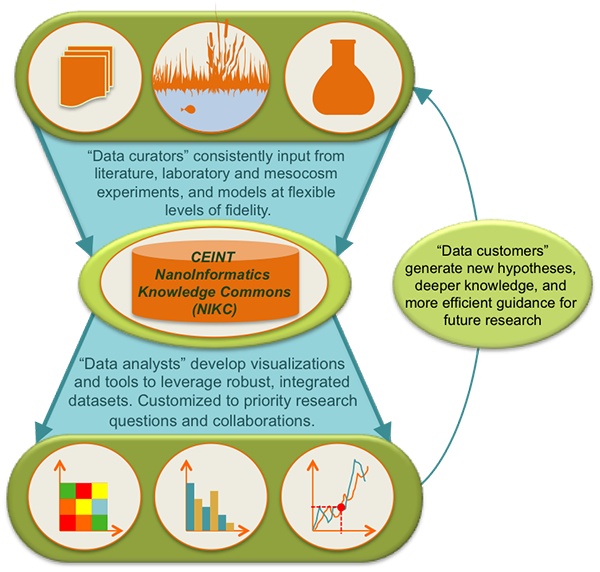

The CEINT NanoInformatics Knowledge Commons (NIKC) is a custom cyberinfrastructure consisting of a data repository and associated analytical tools developed to visualize and interrogate integrated datasets.

The database (DB) is built in MySQL and interacts with applications written in a variety of languages, including R, Python/Django, and Visual Basic.

How are data curated into the NIKC?

Currently, a team of curators enters data by organizing the necessary information in Excel templates. The primary CEINT NIKC architect then maps the completed templates into the DB via a custom API.
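The actual templates and custom API are internal to CEINT and are not described here. As a hypothetical illustration of the general idea only, the sketch below maps one template row (a dict of header to cell text, the shape Python's `csv.DictReader` yields for a sheet exported to CSV) onto DB column names through a lookup table. Every column and field name is an invented assumption.

```python
from typing import Dict, List

# Hypothetical mapping from curator-facing template headers to DB column names.
# None of these names come from the actual NIKC templates.
TEMPLATE_TO_DB: Dict[str, str] = {
    "Material Name": "material_name",
    "Core Composition": "core_composition",
    "Primary Particle Size (nm)": "primary_size_nm",
    "Source Publication DOI": "source_doi",
}


def map_template_row(row: Dict[str, str]) -> Dict[str, object]:
    """Translate one template row into a DB-ready record.

    Unknown headers raise KeyError so template typos surface early,
    blank cells become None (SQL NULL), and *_nm fields are parsed as floats.
    """
    record: Dict[str, object] = {}
    for header, raw in row.items():
        if header not in TEMPLATE_TO_DB:
            raise KeyError(f"Unrecognized template column: {header!r}")
        field = TEMPLATE_TO_DB[header]
        value = raw.strip()
        if value == "":
            record[field] = None
        elif field.endswith("_nm"):
            record[field] = float(value)
        else:
            record[field] = value
    return record


def map_template(rows: List[Dict[str, str]]) -> List[Dict[str, object]]:
    """Map every row of a completed template."""
    return [map_template_row(r) for r in rows]
```

Keeping the header-to-field mapping in one table means curators can work with readable column labels while the DB keeps stable, normalized names.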

Future efforts will focus on developing web-enabled curation tools for populating the NIKC directly. These tools will target datasets (from CEINT and key collaborators) that represent a critical mass of information for our priority research questions.

What data are curated into the NIKC?

Curated data describe:

• Nanomaterials, in terms of their intrinsic, extrinsic (system-dependent), and social (e.g., anticipated use scenarios, matrix, concentration in products) properties;

• System characteristics (environmental, biological, laboratory, etc.);

• Exposure and hazard measurements, calculations, and estimates; and

• Metadata associated with each of these, including bibliometrics, protocols, equipment, and temporal and spatial descriptors.
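To make these categories concrete, here is a hypothetical in-memory model in Python. The class and field names are illustrative assumptions, not the NIKC schema (which lives in MySQL). The point it shows is that each material property carries an explicit intrinsic, extrinsic, or social tag, and each property or measurement can carry its own metadata.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import List, Optional


class PropertyKind(Enum):
    INTRINSIC = "intrinsic"  # e.g. core composition, primary particle size
    EXTRINSIC = "extrinsic"  # system-dependent, e.g. size in a given medium
    SOCIAL = "social"        # e.g. use scenario, matrix, concentration in product


@dataclass
class Metadata:
    citation: Optional[str] = None   # bibliometrics
    protocol: Optional[str] = None
    equipment: Optional[str] = None
    location: Optional[str] = None   # spatial descriptor
    timepoint: Optional[str] = None  # temporal descriptor


@dataclass
class MaterialProperty:
    name: str
    value: str
    kind: PropertyKind
    meta: Metadata = field(default_factory=Metadata)


@dataclass
class Nanomaterial:
    label: str
    properties: List[MaterialProperty] = field(default_factory=list)

    def by_kind(self, kind: PropertyKind) -> List[MaterialProperty]:
        """Return only the properties of one kind."""
        return [p for p in self.properties if p.kind is kind]


@dataclass
class System:
    label: str
    category: str  # "environmental", "biological", "laboratory", ...


@dataclass
class Measurement:
    material: Nanomaterial
    system: System
    endpoint: str  # an exposure or hazard endpoint
    value: float
    unit: str
    origin: str    # "measured", "calculated", or "estimated"
    meta: Metadata = field(default_factory=Metadata)
```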

The DB structure was designed with existing ontologies and controlled vocabularies in the nanoinformatics field in mind. This work began with a close collaboration with the Nanomaterial Registry to ensure straightforward data sharing, and it includes mappings to the ISA-TAB-nano file-sharing format where applicable.

The structure can accept data at varying levels of granularity, or fidelity, both in experimental complexity and in data richness (e.g., from directly measured raw data to higher-level calculated values that summarize those raw data). In terms of experimental complexity, the NIKC can accommodate directly measured values from settings ranging from complex mesocosm field experiments to straightforward laboratory experiments.

For each project, we curate data at the most granular level that time and effort allow, which maximizes the cross-study comparisons possible through queries on the backend.
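A rough illustration of why granular curation pays off in backend queries: the sketch below uses Python's built-in `sqlite3` as a stand-in for MySQL, with a hypothetical two-table layout in which each value is tagged as `raw` or `summary` and linked to its study. Because raw values are kept, a single query can recompute a consistent summary (here, a mean per material for one endpoint) across studies instead of relying on each paper's own summary statistics. Table names, column names, and the `level` tag are assumptions.

```python
import sqlite3
from typing import List, Tuple

# Hypothetical schema; sqlite3 stands in for the MySQL backend.
SCHEMA = """
CREATE TABLE study (
    study_id INTEGER PRIMARY KEY,
    citation TEXT NOT NULL
);
CREATE TABLE measurement (
    measurement_id INTEGER PRIMARY KEY,
    study_id INTEGER NOT NULL REFERENCES study(study_id),
    material TEXT NOT NULL,
    endpoint TEXT NOT NULL,
    level TEXT NOT NULL CHECK (level IN ('raw', 'summary')),
    value REAL NOT NULL,
    unit TEXT NOT NULL
);
"""


def build_db() -> sqlite3.Connection:
    """Create an empty in-memory database with the sketch schema."""
    conn = sqlite3.connect(":memory:")
    conn.executescript(SCHEMA)
    return conn


def cross_study_means(conn: sqlite3.Connection, endpoint: str,
                      unit: str) -> List[Tuple[str, int, float]]:
    """Pool raw values for one endpoint across all studies.

    Returns (material, number of contributing studies, pooled mean),
    sorted by material. Summary-level rows are deliberately ignored.
    """
    sql = """
        SELECT material,
               COUNT(DISTINCT study_id) AS n_studies,
               AVG(value) AS pooled_mean
        FROM measurement
        WHERE level = 'raw' AND endpoint = ? AND unit = ?
        GROUP BY material
        ORDER BY material
    """
    return conn.execute(sql, (endpoint, unit)).fetchall()
```

Pooling raw values this way weights each study by its number of replicates. A real analysis might instead weight studies equally or by quality, and keeping the raw data is what leaves that choice open.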

How are data analyzed in the NIKC?

The NIKC is designed to enable flexible analysis across integrated datasets to support the discovery of trends, hypotheses, and conclusions that would not have been possible without reusing data across multiple studies.

Applications are built to sit on top of the database and draw on otherwise disparate datasets and data types to probe our driving research questions. These resource-intensive efforts target key collaborations and are designed with multi-purpose utility in mind. For example, the NanoPHEAT tool (Nano Product Hazard and Exposure Assessment Tool) compiles dose-response curves from the literature, spanning hundreds of papers, and calculates the estimated exposure to nanoparticles released from real products, based on both in-house experimental values and model-generated values. For a wide array of toxicity endpoints, the tool superimposes this forecasted realistic exposure onto dose-response curves generated from the curated literature.
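The description above does not specify which dose-response models NanoPHEAT uses. As a hedged sketch of the general idea of superimposing a forecasted exposure onto a dose-response curve, the code below assumes a Hill (log-logistic) model, a common choice but an assumption here, and reports the predicted response at the estimated exposure along with how far that exposure sits below the EC50. The `HillCurve` class, the `superimpose` function, and all parameter names are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class HillCurve:
    """Hypothetical dose-response curve fitted to curated literature data."""
    endpoint: str
    ec50: float          # dose giving a half-maximal response (same units as dose)
    hill: float          # Hill slope (steepness of the curve)
    bottom: float = 0.0  # response with no exposure
    top: float = 100.0   # maximal response

    def response(self, dose: float) -> float:
        """Hill equation: bottom + (top - bottom) / (1 + (EC50 / dose)^n)."""
        if dose < 0:
            raise ValueError("dose must be non-negative")
        if dose == 0:
            return self.bottom
        return self.bottom + (self.top - self.bottom) / (1.0 + (self.ec50 / dose) ** self.hill)


def superimpose(curve: HillCurve, exposure: float) -> dict:
    """Place one forecasted exposure on one dose-response curve."""
    return {
        "endpoint": curve.endpoint,
        "exposure": exposure,
        "predicted_response": curve.response(exposure),
        # Fold-difference between EC50 and exposure (>1 means exposure is below EC50)
        "margin_to_ec50": curve.ec50 / exposure if exposure > 0 else float("inf"),
    }
```

Calling `superimpose` once per curve, with one curve per toxicity endpoint, gives the per-endpoint comparison described above. The exposure value itself would come from the product-release estimates, which this sketch does not model.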

CEINT Background: Vision and motivation for nanoinformatics

CEINT is a 10-year center funded by NSF and EPA with a dual mission: to elucidate principles of nanomaterial behavior in the environment, and to translate these principles into guidance for decisions about the environmental implications of nanomaterials. This dual mission serves as a compass in navigating the tradeoffs and resource-allocation decisions necessary in developing a data management approach within a field that has enormous data diversity and many sources of uncertainty.

As an integrated test bed, CEINT is in many ways an ideal place to begin exploring nanoinformatics. It is difficult to develop ontologies, controlled vocabularies, cyberinfrastructures, and analytical tools for what is still uncertain: queries we have not yet imagined, about parameters we may only now be learning are important. We may also be measuring with methods and protocols that are still under development, so considerable flexibility must be built in. CEINT provides a test set of data that, while diverse in methods, endpoints, and materials, is integrated in terms of material supply chain and research goals. The luxury of a well-defined scope means that CEINT can state definitively, based on our research mission, what success means for our resource. This combination of a unified scope and an integrated test bed of data, which has grown from an intertwined system of projects, has allowed CEINT to make measurable progress toward specific research goals while developing methodologies that are generalizable across the field.